OHSHIT REPORT: 41 days of clickstream data lost

When something goes terribly wrong here at Mediocre, we draft up internal memos called “OHSHIT REPORTS”. We use OHSHIT REPORTS to share details across the company about what went wrong, coordinate work across teams on how we’re going to fix it, and dig deep into root causes.

Overview

A combination of a server failure, a misconfiguration, and a flaw in our backup plan resulted in the loss of 41 days of clickstream data (real-time data about pageviews on our websites).

Keep in mind, this data loss does not affect any account data in any way. All meh button clicks, forum comments, and orders are stored in a different database with much more redundancy.

We thought we’d share this OHSHIT REPORT with our community to explain some missing stats on old deal pages.

Clickstream data?

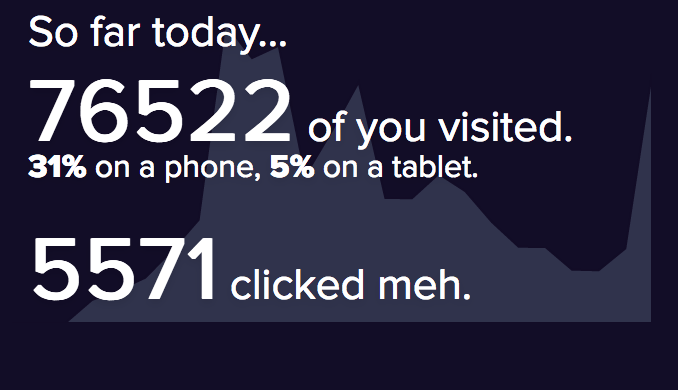

We use clickstream data to power the traffic stats we share publicly on the meh.com homepage. We’ve talked before about the technology behind the realtime referral stats graph and how we store clickstream data in InfluxDB.
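For readers curious what a single clickstream point might look like, here is a minimal Python sketch that formats one pageview in InfluxDB's line protocol: a measurement name, comma-separated tags, a field set, and a nanosecond timestamp. The measurement name `pageviews`, the `site` and `referrer` tags, and the `count` field are hypothetical and chosen for illustration; this is not our actual schema.

```python
from typing import Dict


def escape_tag(value: str) -> str:
    """Escape commas, equals signs, and spaces, which are special in tag keys and values."""
    return value.replace(",", r"\,").replace("=", r"\=").replace(" ", r"\ ")


def pageview_line(site: str, referrer: str, timestamp_ns: int) -> str:
    """Format one pageview as an InfluxDB line-protocol point.

    Hypothetical schema: measurement "pageviews", tags "site" and
    "referrer", and an integer field "count".
    """
    tags: Dict[str, str] = {"site": site, "referrer": referrer}
    # Tags are written sorted by key.
    tag_str = ",".join(f"{k}={escape_tag(v)}" for k, v in sorted(tags.items()))
    # Integer field values carry an "i" suffix; the timestamp is in nanoseconds.
    return f"pageviews,{tag_str} count=1i {timestamp_ns}"


print(pageview_line("meh.com", "google.com", 1483664400000000000))
```

Sorting tags by key before writing is a practice the InfluxDB documentation recommends for write performance.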

Wait, didn’t we go through this once already?

Yes, we lost 3 days of clickstream data back in May 2015 when we tried to upgrade InfluxDB from v0.7.3 to v0.8.8.

Okay, so what’d you do this time?

In March 2016, we were close to running out of disk space on our InfluxDB server hosted in Microsoft Azure. We started looking for a new home for the data, somewhere it would have room to grow. Lucky for us, or so we thought, there was already another disk attached to our server that we could migrate our data to.

To understand why we’d think this disk was safe, you need to understand how file systems conventionally work in Linux. Windows, as you may know, assigns a letter to every drive that’s in your computer. Typically, C: is the drive containing Windows, and any other drives, such as USB flash sticks or CD drives, get lettered upward from there.

Linux works differently. A drive shows up in your system like any other folder, and can even show up as a folder contained in another drive. A file called fstab (short for File Systems TABle, found at /etc/fstab) controls this, and plenty of annotated examples are available online. Ours was much simpler than most: one device mounted at /, and another mounted at /mnt.
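To make the fstab description concrete, here is a small Python sketch that parses a hypothetical two-entry fstab like the one described above: the OS disk at / and a second device at /mnt. The device names (/dev/sda1, /dev/sdb1), the ext4 file system type, and the mount options are assumptions for illustration. Each non-comment line holds six whitespace-separated fields; the sketch keeps the first three.

```python
from typing import List, Tuple

# Hypothetical fstab in the spirit of our setup: OS disk at /, second device at /mnt.
# Device names, file system types, and options are assumptions, not our real config.
EXAMPLE_FSTAB = """\
# <device>   <mount point>  <type>  <options>  <dump>  <pass>
/dev/sda1    /              ext4    defaults   0       1
/dev/sdb1    /mnt           ext4    defaults   0       2
"""


def parse_fstab(text: str) -> List[Tuple[str, str, str]]:
    """Return (device, mount_point, fs_type) for each non-comment fstab line."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split()
        entries.append((fields[0], fields[1], fields[2]))
    return entries


for device, mount_point, fs_type in parse_fstab(EXAMPLE_FSTAB):
    print(f"{device} is mounted at {mount_point} ({fs_type})")
```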

Typically, /mnt is a catch-all for disks other than the one your operating system is on. For instance, a CD drive usually shows up at /mnt/cd-rom. This is generally only for disks without a specific purpose; one common practice is to store the /home directory on its own device, since it plays host to frequently-accessed user data. Our drive wasn’t mounted at /tmp, the standard temporary directory, nor was it living at /mnt/removable-storage or something similar. We thought it was a good place to store our data.

We were wrong.

It turns out that the “sdb” device (Linux naming for the second SCSI-style disk) on an Azure virtual machine is in no way related to the “virtual hard drive” attached to the virtual machine. (Cue /image Virtual Boy.) It is meant only for temporary storage because it lives on an actual, physical hard drive connected to an actual, physical computer in a Microsoft data center. If your virtual machine gets moved, you lose any data that was on that drive.

Each of these drives carries a warning file called “DATALOSS_WARNING_README” that explains this, but our research indicates that the file was added sometime near the beginning of November 2014, about 5 months after we set up our virtual machine.
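In hindsight, a cheap guard would have been to check a mount for that warning file before putting anything important on it. The Python sketch below does that, assuming the file sits in the top-level directory of the mount and that its name begins with DATALOSS_WARNING_README. As our own timeline shows, a missing warning file does not prove a disk is durable, so treat this as one check among several rather than a guarantee.

```python
import os
import tempfile

# Assumption: the warning file lives in the mount's top-level directory and its
# name begins with this prefix (for example DATALOSS_WARNING_README.txt).
WARNING_PREFIX = "DATALOSS_WARNING_README"


def has_dataloss_warning(mount_point: str) -> bool:
    """Return True if the top level of `mount_point` contains a warning file.

    Raises OSError if the directory cannot be listed, so a missing or
    unreadable mount is never silently treated as safe.
    """
    return any(name.startswith(WARNING_PREFIX) for name in os.listdir(mount_point))


# Demonstrate with a throwaway directory standing in for a temporary disk.
with tempfile.TemporaryDirectory() as fake_mnt:
    print(has_dataloss_warning(fake_mnt))  # False: no warning file yet
    open(os.path.join(fake_mnt, "DATALOSS_WARNING_README.txt"), "w").close()
    print(has_dataloss_warning(fake_mnt))  # True
```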

The incident.

At 5:10 P.M. on January 5, 2017, Azure began rebooting all our virtual machines. There is no way for us to know for sure, but this was likely for hardware maintenance, moving our virtual machines to a different physical server. When the reboot completed, we discovered that we had lost access to all the data we had stored in /mnt, which included all clickstream data since we began using the drive in March 2016. When we restored the most recent backup, we found that this drive had not been included in our backups, which captured snapshots of the operating system disk only.
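The deeper flaw was that our backups covered only the operating system disk. A minimal Python sketch of an audit that would have caught this compares every mounted device against the devices the backup job snapshots. The function name and both inputs are hypothetical stand-ins for whatever your mount table and backup configuration actually report.

```python
from typing import Dict, List, Set


def unprotected_mounts(mounts: Dict[str, str], backed_up_devices: Set[str]) -> List[str]:
    """Return mount points whose device is not included in any backup.

    `mounts` maps mount point -> device; `backed_up_devices` is the set of
    devices the backup job actually snapshots. Both inputs are hypothetical.
    """
    return sorted(mp for mp, dev in mounts.items() if dev not in backed_up_devices)


# Hypothetical layout matching the incident: only the OS disk is snapshotted.
mounts = {"/": "/dev/sda1", "/mnt": "/dev/sdb1"}
print(unprotected_mounts(mounts, backed_up_devices={"/dev/sda1"}))  # ['/mnt']
```

Running a check like this whenever a disk is added turns "we forgot to back it up" into a visible alert instead of a surprise during a restore.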

Fortunately, in preparation for an upgrade, we had already copied all our pageview data through November 25, 2016 into a separate instance of InfluxDB v1.1. By switching to this new instance early, we were able to limit the data loss to the period from November 25, 2016 at 12:12 P.M. to January 6, 2017 at 1 A.M., just over 41 days. Without this, the data loss would have spanned 249 days.
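For anyone checking our math, the length of the gap can be computed directly with Python's datetime module. The post gives no time zone, so this sketch assumes both timestamps are in the same zone.

```python
from datetime import datetime

# Boundaries of the lost range as stated above. No time zone is given, so both
# are treated as naive times in the same zone (an assumption).
gap_start = datetime(2016, 11, 25, 12, 12)  # November 25, 2016, 12:12 P.M.
gap_end = datetime(2017, 1, 6, 1, 0)        # January 6, 2017, 1:00 A.M.

gap = gap_end - gap_start
print(gap)                                    # 41 days, 12:48:00
print(round(gap.total_seconds() / 86400, 2))  # 41.53
```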

The impact.

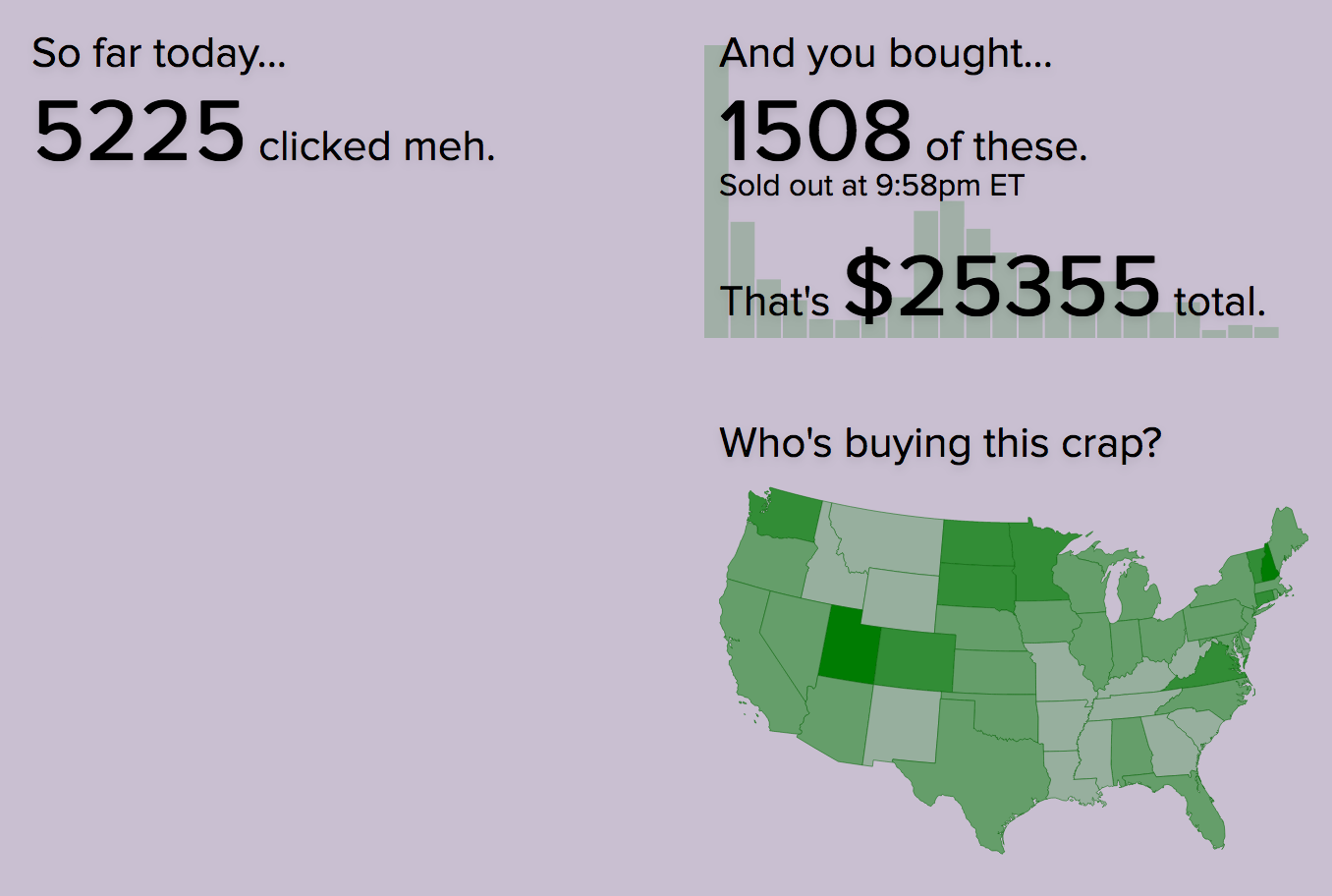

Any deal archive pages between 11/26/2016 and 1/5/2017, such as the December 26 Haribo Gold Gummi Bear deal, are permanently missing traffic stats. The gap leaves a big blank space in the bottom left of the deal page.

The stats on the days right on the edges of the affected period are not completely missing, but they are missing chunks of time. You can see this if you look at the pageview graphs for the Canon T6 deal and the Life Gear Pro CREE LED Flashlight deal.
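The partial days on the edges fall out of the same arithmetic: intersect each calendar day with the lost range. This Python sketch assumes a deal day runs from midnight to midnight in the same time zone as the gap timestamps, which is an assumption about how the graphs are bucketed; the helper name `missing_on_day` is hypothetical.

```python
from datetime import datetime, timedelta

# Lost range as stated in the report (same-time-zone assumption as before).
gap_start = datetime(2016, 11, 25, 12, 12)
gap_end = datetime(2017, 1, 6, 1, 0)


def missing_on_day(day: datetime, start: datetime, end: datetime) -> timedelta:
    """Return how much of the 24 hours beginning at `day` (midnight) falls in [start, end)."""
    day_end = day + timedelta(days=1)
    overlap = min(day_end, end) - max(day, start)
    return max(overlap, timedelta(0))  # days outside the range lose nothing


for day in (datetime(2016, 11, 25), datetime(2016, 12, 26), datetime(2017, 1, 6)):
    print(day.date(), missing_on_day(day, gap_start, gap_end))
```

Under those assumptions, November 25 is missing its last 11 hours and 48 minutes, a day inside the range such as December 26 is missing entirely, and January 6 is missing only its first hour.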

How will we prevent this from happening again in the future?

We’re no longer managing our own InfluxDB servers. We have migrated our clickstream data to InfluxCloud, where we pay the team that builds InfluxDB to keep an eye on our cluster 24/7 to make sure it’s running smoothly. InfluxCloud also includes hourly backups in case we encounter another disaster.

Want to help us?

We have several positions open on our engineering team, including a DevOps Engineer. If you think you’d like setting up servers and fixing backup plans with a friendly group of oddballs, you should take a look at our job listings.


Row row row your

@snapster

gently down shit stream.

merrily merrily merrily merrily,

data’s but a dream.

@harrison you had me at “All meh button clicks… are stored in a different database with much more redundancy.”

@carl669 That was the only part I cared about too.

@carl669 Same here.

Tho my thinking was more along the lines of “OH! OH! OH! If they lost the meh clicks and just give everyone those days as clicked, my streak would be up over 400!”

Alas, 'twas not to be and thus I’m at 46 for today.

So @shawn… how many pizzas and with what toppings for that click?


I have so many questions before I let you hire me.

1- Do I have to know how to do computer stuff?

2- Do I have to be sober?

3- Who buys the beer I require?

4- I hope there is no actual tracking of ‘time worked’.

5- Would I have to show up and do something (other than drink)?

6- Do you supply the pizzas & tacos?

Eagerly awaiting your reply… Dave.

@daveinwarsh they already have a Dave working there though.

@carl669 That’s OK. You can’t have too many Daves.

@daveinwarsh

True story: nearly every class in my two years in college, along with 1st, 2nd, and 4th grades, and a few years of church camp had at least two other Davids.

Then a job I recently lost (contract expired) had at least three other Davids working there.

Is that too many? Well, I haven’t run out of alphabet letters, but I have used a few twice.

@harrison I’m curious - and I ask this admitting that I have only the most tenuous grasp on the technical stuff - but if I’m reading this right:

The dataloss warning was added Nov 2014 (after your server was setup)

The files started being stored there in March 2016

Shouldn’t somebody have seen that warning file and notified you before you started storing stuff there??

@Bingo RTFM?

@Bingo the dataloss warning was added to the code that sets up servers. our server was already set up.

I was just explaining virtual boy, mario tennis, and red alarm.

@Pantheist Have you ever heard of Virtual Valerie?

I find it interesting that Google doesn’t return any hits to it, tho it does find references to its sequel, Virtual Valerie 2 (NSFW)

@baqui63 no, but I’m about to find out…

I just checked my meh button clicks and quite a few are missing. I did have a perfect click record. Pls fix. Thx.

@miko1 Clever.

Did it work?

@LaVikinga Not yet. That’s weird. I have always found meh customer service to be on fleekpoint. They must just be unusually busy.

I’d apply but I don’t want to move.

All my clickety statistics are fine and dandy.

Sorry about the drive. Hard lesson to learn, but at least there were backups for most of it. Would you like a little help from Simon Travaglia? Clickety-click.

Whenever I see anything in /mnt on a production server I assume somebody plugged a thumb drive in the wrong place.

The “us humans never learn” part:

This might have been answered before, but I’m curious as to the advantage of using Azure over AWS. I’m not well versed in this area and am curious.

@DanielTheNerd So, this is probably the definitive post on the topic: https://mediocre.com/forum/topics/one-does-not-simply-run-node-js-on-microsoft-azure Otherwise, leave a question for @Shawn and he might answer it for you.

@harrison thank you for the ohshit report! I don’t like it when the report hits the fan on you guys. But ya know… It’s great to see you guys are human too!

@sohmageek

You think they’re human? You’re quite the optimist!

@f00l you talk not just like???

@sohmageek i resent the implication that i am a mere, powerless fleshsack

@harrison fleshsack? really…

@sohmageek look i don’t want to cramp hk-47’s style

@harrison me thinks you are trolling me now…

Are you sure the Virtual Disk was not stored as a vmdk and moved with the machine in the folder?

Have you guys embraced virtual teams and remote workers yet or are you still all “You have to come live in blistering heat”?

@mikey we’ve never forced anyone to live in Arizona