How we built the realtime referral stats graph for meh.com

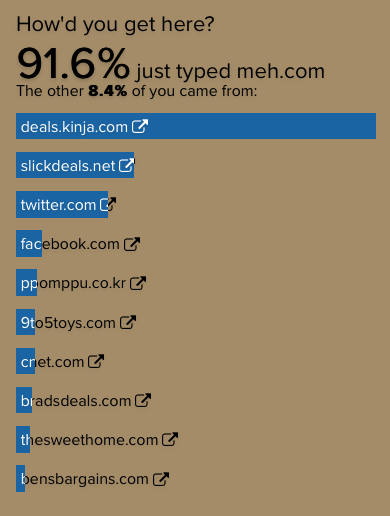

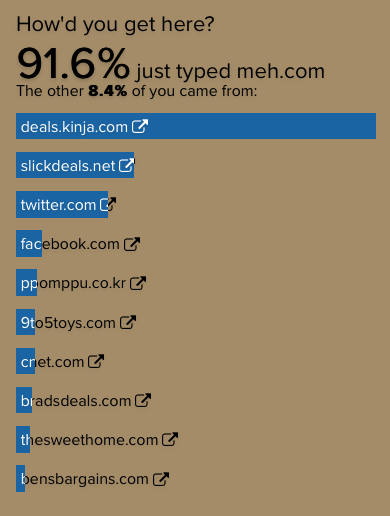

13We recently launched a new referral stats section that shows how people are arriving at meh.com each day.

Thought it would be worth a post to share how we built it, what technology we used, and what we learned along the way in case there are other sites out there willing to surface their realtime referral stats.

Step 1: Data Collection

Since we launched meh.com, we've been logging every website request to a time series database. We chose InfluxDB based on its excellent write throughput and SQL-like query language. We've had some issues with read performance that we've worked around with caching, but keep in mind we've put millions and millions of website requests into this thing.

Meh.com is a Node.js app. The most popular InfluxDB module for Node.js only supports HTTP connections to InfluxDB. Since we're logging millions of requests we wanted something closer to the metal. InfluxDB supports writing data using UDP but we couldn't find a Node.js module for it.

We wrote influx-udp and open sourced it. You can find the code at GitHub or install it at NPM.

On every website request we have some middleware that sends details about the request to InfluxDB using influx-udp. It's fast and has a minimal effect on performance. Looks something like this:

var influxUdp = new InfluxUdp({ host: process.env.influxHost, port: port });

influxUdp.send({ request: {

method: req.method,

referrer: req.headers.referer,

session: req.sessionID,

url: req.url

userAgent: req.headers['user-agent'],

device: detectDevice(req.headers['user-agent']) }

});

Another interesting thing to note is that we're using express-device to help us determine if the request is coming from a desktop browser, mobile device, tablet, or a bot.

Step 2: InfluxDB Query

Once we've collected the data into InfluxDB we needed to query the referrer data. We used the standard influx module for this which retrieves InfluxDB data over HTTP.

It's a pretty simple query:

SELECT referrer, COUNT(DISTINCT(session)) FROM requests GROUP BY referrer WHERE time > {start} AND time < {end} AND device <> 'bot'

This gives us a count of all the requests (excluding bots) that visited from each referrer in a specified range of time. The DISTINCT(session) part helps us avoid counting the same referrer more than once for the same user session.

This gives us back some data that looks like:

[

{ "count": 1234, "referrer": "http://daringfireball.net/linked/2014/11/07/meh" },

{ "count": 1000, "referrer": "http://slickdeals.net/f/7816369-looney-tunes-8gb-usb-2-0-mp3-player-8-00-s-h-meh" },

{ "count": 999, "referrer": "http://www.cnet.com/news/five-deal-friday-ooma-beats-gaffigan-and-more/" }

]

This query is taking about 1-2 seconds to run, so we cache it in Redis for about 5 minutes using the petty-cache module we wrote.

Step 3: Data Visualization

The data that comes back to us has a lot of noise in it. We see a bunch of search engines, feed readers, referrers that don't look like URLs at all, and URLs that are effectively the same but vary by subdomains (domain.com, www.domain.com, m.domain.com). Before we're able to render a pretty graph we need to clean up the data a bit. There's essentially three phases to the cleanup.

Ignoring URLs. We found four patterns of URLs that we simply remove from all calculations. The first is obvious, we remove any URLs from meh.com to prevent any self referrals. Then we remove sign ins from Amazon or Google since we allow signing in to your Mediocre Laboratories account through these two sites. We also remove any referrals we see from mediocre.com sign in pages.

Hidden URLs. We're left with the URLs that we want to count as referral traffic, but that doesn't necessarily mean we want to show them all. Some of the URLs we've found just aren't that useful so we have a blacklist for things like search engines, feed readers, webmail clients, URLs that redirect straight back to meh.com. It's a short list and you'll find it down below.

URL mappings. We learned that we should automatically treat HTTP and HTTPS as the same thing when grouping referrers. We also automatically treat domain.com, www.domain.com, and m.domain.com as the same thing. And then we also built a short list of similar patterns based on the data we were seeing.

All of this cleanup ends up being a few collections of RegEx patterns that look like this:

ignored = [

/^https?:\/\/(www\.)?amazon\.com\/ap/,

/^https?:\/\/accounts\.google\.com/,

/^https?:\/\/(www\.)?mediocre\.com\/account/,

/^https?:\/\/(www\.)?meh\.com/,

];

hidden = [

/^https?:\/\/mail\.aol\.com/,

/^https?:\/\/(www\.)?bing\.com/,

/^https?:\/\/(.*\.)?search\.naver\.com/,

/^https?:\/\/newscentral\.exsees\.com/,

/^https?:\/\/(www\.)?feedly\.com/,

/^https?:\/\/(www\.)?flipboard\.com\/redirect/,

/^https?:\/\/(www\.)?google\./,

/^https?:\/\/(.*\.)?lazywebtools\.co\.uk/,

/^https?:\/\/(www\.)?tedder\.me/,

/^https?:\/\/pipes\.yahoo\.com/,

/^https?:\/\/(.*\.)?search\.yahoo\.com/

];

mappings = [

{ key: 'appleinsider.com', match: /^https?:\/\/(.*\.)?appleinsider\.com/ },

{ key: 'bensbargains.net', match: /^https?:\/\/(.*\.)?bensbargains\.net/ },

{ key: 'dealnews.com', match: /^https?:\/\/(.*\.)?dealnews\.com/ },

{ key: 'facebook.com', match: /^https?:\/\/(.*\.)?facebook\.com/ },

{ key: 'ppomppu.co.kr', match: /^https?:\/\/s\.ppomppu\.co\.kr/ },

{ key: 'reddit.com', match: /^https?:\/\/(.*\.)?reddit\.com/ },

{ key: 'twitter.com', match: /^https?:\/\/t\.co/ },

{ key: 'twitter.com', match: /^https?:\/\/(.*\.)?twitter\.com/ },

{ key: 'twitter.com', match: /^https?:\/\/(www\.)?twitterrific\.com/ }

];

From this point on things get pretty straightforward. We group all the referral URLs by domain, calculate their percentages, show the top 10 in a bar graph, and link to the most frequent URL we've seen from that domain.

Thanks to @harrison for building all of this for us. I think it turned out great.

- 3 comments, 7 replies

- Comment

Very cool to see the coding behind it. Nicely done.

i have no idea what you just said. however, if you look at all the \ and / just right, they look like they're dancing.

@carl669 \o\ /o/ \o/

@harrison please note that attempting to use the previous reply in code will result in a syntax error

@harrison Now that looks like people drowning

@carl669 - I agree:

@lichme @harrison @KDemo

@carl669 - Oooh, the macarena! I was sure you meant stick dancing. I'm sure it's your fault I got it wrong.

@KDemo The weirdest thing is that I've seen that video before. I think I was looking up a song lyric that mentioned Morris dancing.

Hey. Maybe you should merge @lichme 's apps (the two stalkers) into a single thing.

The "(.*.)" looks like boobs (much more so in the font and context above).

Also, http://xkcd.com/1513/